End-To-End Information Processing

FloodTags analyzes and processes water-related information from a large number of online sources, and disseminates the results of these analyses through a dashboard and an API. Our systems are developed in close collaboration with both academic and non-academic partners and feature state-of-the-art machine learning and natural language processing algorithms. In this section we present some of the most important features of the software.

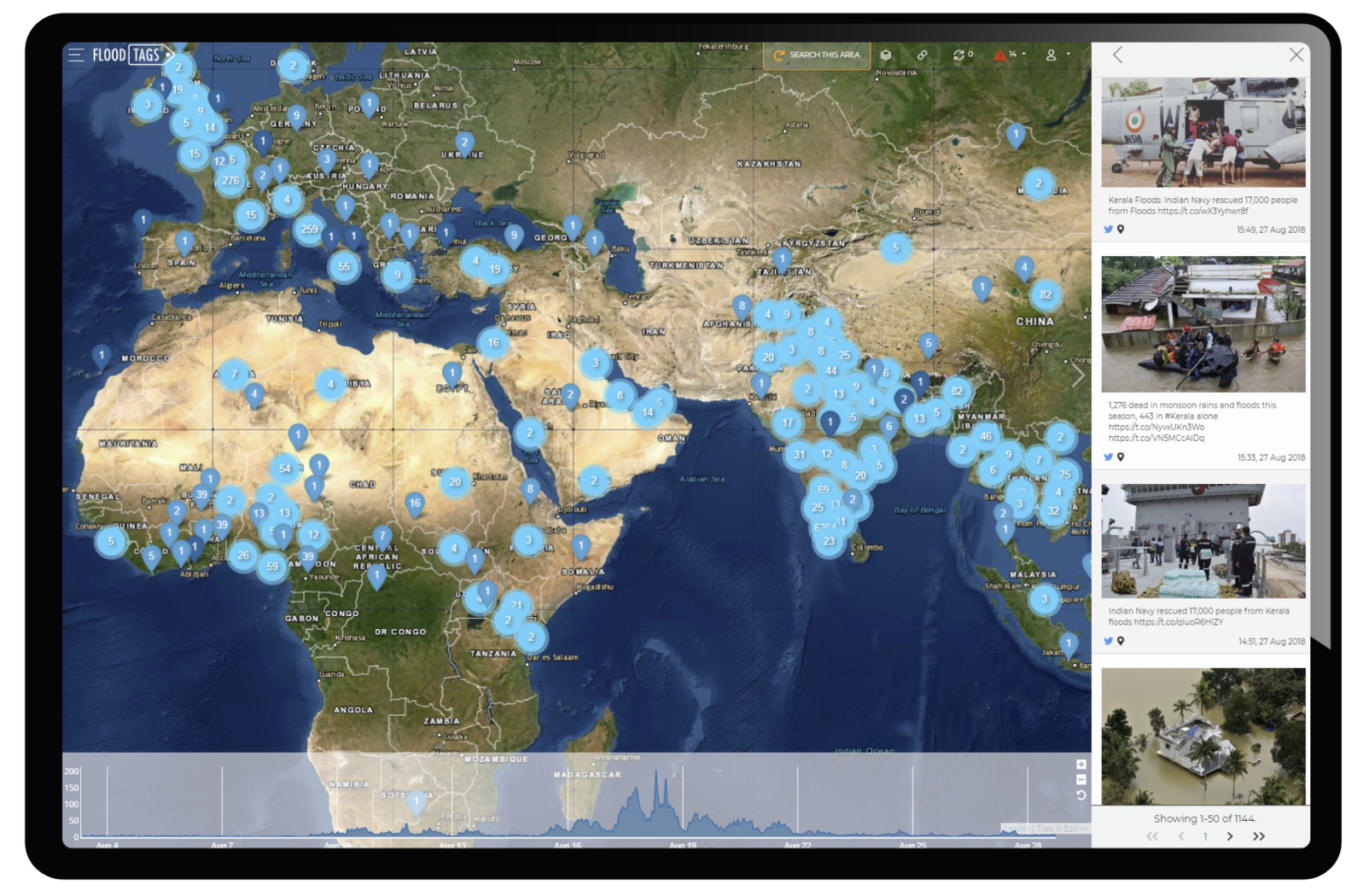

Online Media Dashboard

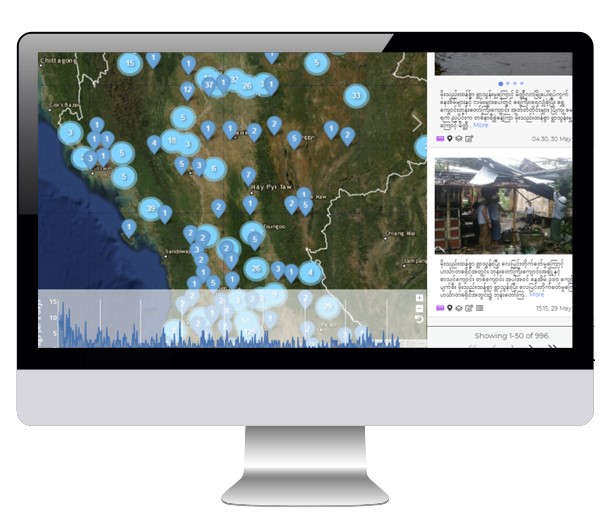

In the FloodTags Dashboard you can access and explore all available media data, both in real time and for past events. It lets you quickly understand the current situation while offering several options to dig deeper into the data. The website consists of maps showing the georeferenced data, graphs showing statistics, and several side menus that list individual tags, events and various statistics. You can search the data with a query function and filter it by geographic area, topic relatedness and relevance indicators. The dashboard is available on desktop and mobile and can be accessed from any mainstream platform.

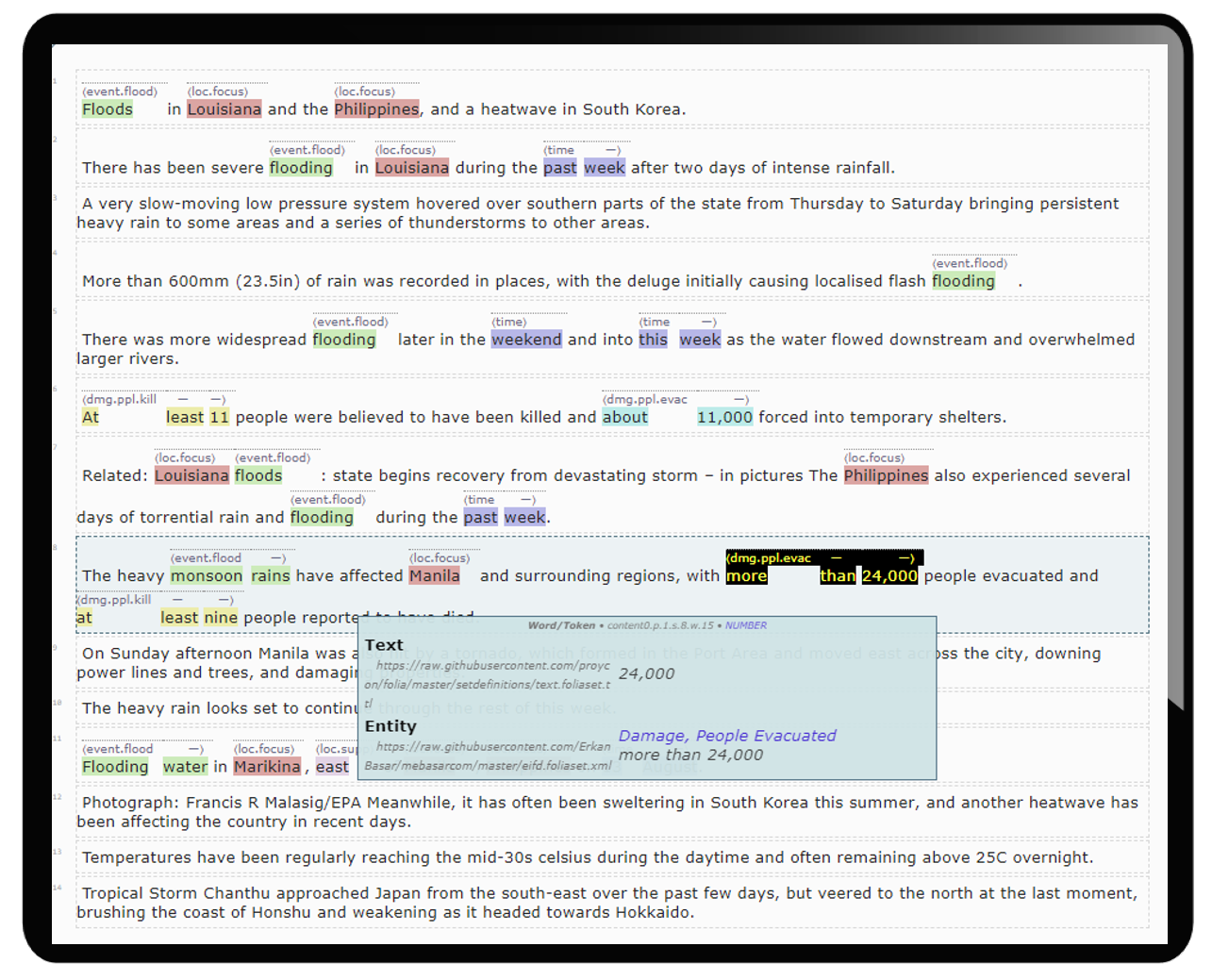

Information Extraction (IE)

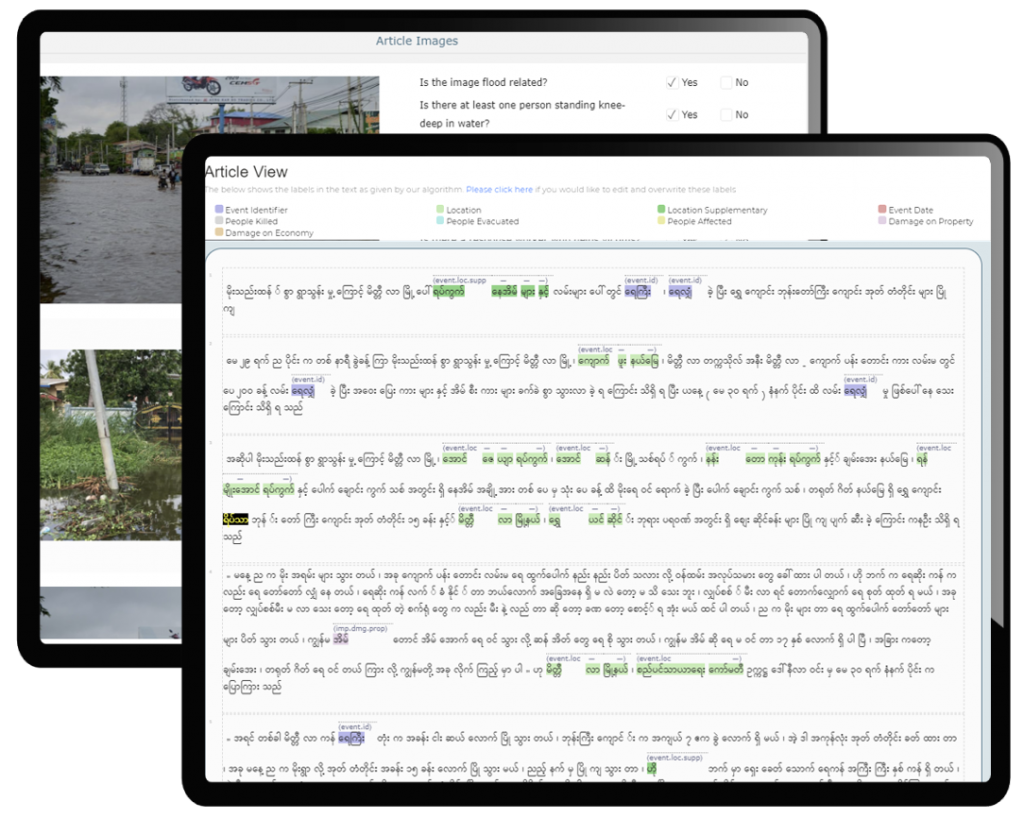

We use supervised machine learning to build classifiers and extraction algorithms that extract information from the text and images in the media data. For micro-text such as tweets, we focus on detecting statistical anomalies in combination with natural language processing. For longer texts, such as news articles, we use a more elaborate machine learning process to extract information such as the location and start time of an event, as well as event properties such as the number of affected people, evacuees and casualties, and the extent of damage. As part of the classification, we sometimes invite our clients to join our annotation process via a fully supported website, labelling content to determine what is relevant for their specific use case.
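FloodTags does not specify which anomaly statistic it applies to micro-text. One common choice is a rolling z-score over message counts per time step: a step is flagged when its count lies far above the recent baseline. The minimal sketch below assumes hourly counts; the function name, window size, threshold and standard-deviation floor are all illustrative assumptions, not FloodTags parameters.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(counts, window=24, z_threshold=3.0, min_std=1.0):
    """Return the indices of time steps whose message count is anomalously
    high compared with the preceding `window` steps (rolling z-score).

    Hypothetical helper: window, z_threshold and min_std are assumptions.
    """
    history = deque(maxlen=window)  # sliding baseline of recent counts
    spikes = []
    for t, count in enumerate(counts):
        if len(history) == window:
            mu = mean(history)
            # Floor the spread so a perfectly flat baseline does not divide by zero.
            sigma = max(pstdev(history), min_std)
            if (count - mu) / sigma >= z_threshold:
                spikes.append(t)
        history.append(count)  # every count, spike or not, enters the baseline
    return spikes
```

For example, 24 quiet hours of about five messages followed by an hour with forty would flag only that burst hour. In practice, the flagged steps would then be passed to the natural language processing stage rather than being treated as events on their own.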

Application Programming Interface

Integrate media data into your own software using our API. This way you can aggregate, display and post-process media data in your own environment, or even use it for real-time model calibration and validation. Our API already connects to several web portals, such as the Philippine Red Cross Operational Dashboard (where the Red Cross receives event notifications), and to analysis tools such as Delft-FEWS (allowing real-time integration of FloodTags data with other operational water management information). Integrations with remote sensing software are planned. Drop us a message and we can discuss the possibilities for connecting to your software.
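As an illustration of post-processing API output in your own environment, the sketch below aggregates tags into counts per day and location. The JSON payload and its field names (`id`, `date`, `location`) are hypothetical stand-ins; the real FloodTags API schema and endpoints are not described here and may differ.

```python
import json
from collections import Counter

# Hypothetical, illustrative payload; the real FloodTags API schema may differ.
SAMPLE_RESPONSE = """
[
  {"id": "t1", "date": "2021-03-01T08:15:00Z", "location": "Jakarta"},
  {"id": "t2", "date": "2021-03-01T09:40:00Z", "location": "Jakarta"},
  {"id": "t3", "date": "2021-03-02T07:05:00Z", "location": "Bekasi"}
]
"""


def daily_counts(payload: str) -> Counter:
    """Count tags per (day, location) pair from a JSON array of tag objects.

    Assumes ISO 8601 timestamps, so the first 10 characters are the date.
    """
    tags = json.loads(payload)
    return Counter((tag["date"][:10], tag["location"]) for tag in tags)
```

A time series built this way can, for instance, be plotted next to measured water levels, similar to the Delft-FEWS view below.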

Delft-FEWS with measured water levels (graph) compared to tweets (map) in Jakarta (by Arnejan van Loenen of Deltares)

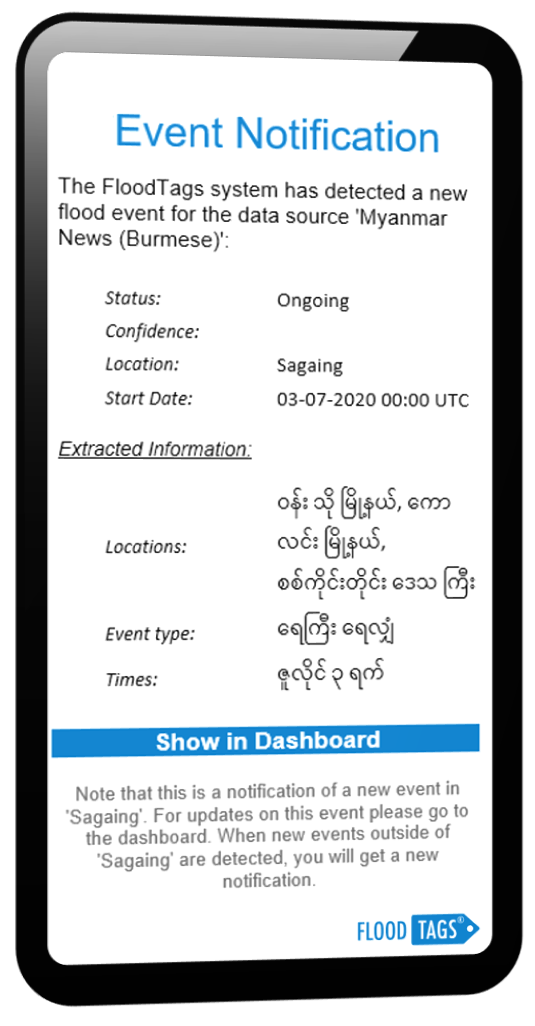

Notification System

You can subscribe to notifications that alert you to new events. The alerts contain the information extracted by the system's IE, such as location, timing and impact data. We also include a confidence level, since some events are determined with higher confidence than others, for instance because they are based on more observations. Finally, each alert contains a hyperlink to the original data in the Dashboard. Notifications are sent via email, WhatsApp or Telegram, and how often you are notified once an event is detected can be changed on the settings page.
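The sketch below shows one way to combine the elements described above: a confidence label derived from the number of observations, a link back to the Dashboard, and a user-configurable minimum interval between notifications for the same event. The class names, the observation thresholds and the example URL are hypothetical assumptions; FloodTags does not publish how its confidence level or throttling are computed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Event:
    event_id: str
    location: str
    start: datetime
    observations: int   # number of messages supporting the event
    dashboard_url: str  # link back to the original data


@dataclass
class Notifier:
    min_interval: timedelta  # user setting: how often one event may trigger an alert
    last_sent: dict = field(default_factory=dict, init=False, repr=False)

    @staticmethod
    def confidence(event: Event) -> str:
        # Illustrative heuristic only: more observations -> higher confidence.
        if event.observations >= 50:
            return "high"
        if event.observations >= 10:
            return "medium"
        return "low"

    def maybe_notify(self, event: Event, now: datetime):
        """Return an alert text, or None if this event was notified too recently."""
        last = self.last_sent.get(event.event_id)
        if last is not None and now - last < self.min_interval:
            return None  # throttled by the user's frequency setting
        self.last_sent[event.event_id] = now
        return (f"[{self.confidence(event)} confidence] Event near {event.location} "
                f"since {event.start:%Y-%m-%d %H:%M}. Details: {event.dashboard_url}")
```

The returned text would then be handed to whichever channel the user chose (email, WhatsApp or Telegram); the delivery step is left out of this sketch.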

Data Sources

FloodTags draws on any media data that is shared publicly online. This includes social media, news articles, blog posts, forum posts and YouTube videos, and we are currently working on internet radio mining. For specific cases we can also use data from Facebook and Instagram, although more restrictive terms apply. Besides monitoring these sources in real time, for some sources we acquire historical data so that past events can be analysed in detail. In addition, we collect chat group information with the explicit permission of its members; more about this under 'Chatbots'.

All data comes together in the FloodTags software. We currently process data in more than fifteen languages, including languages that are not space-separated, such as Burmese and Khmer (Cambodian).

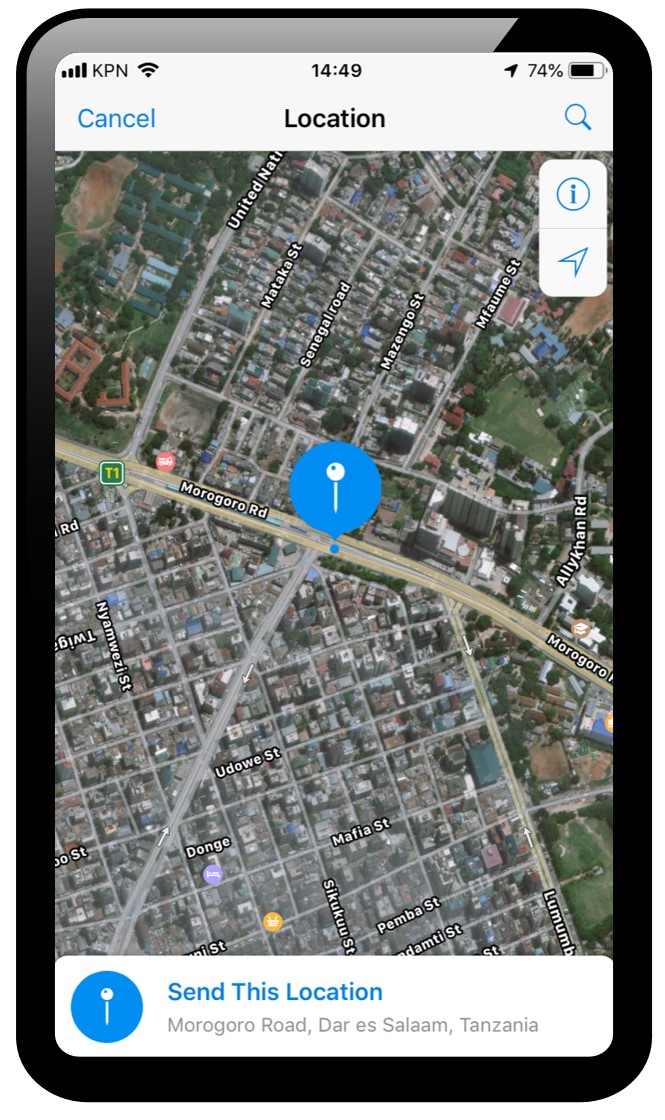

Georeferencing

FloodTags adds georeferences to the data so that it can be viewed on a map. After our IE process extracts location information from the text, we match it against our geonames database and determine the final location using an optimisation algorithm. For instance, ambiguous names that refer to multiple locations are resolved by weighing the alternatives based on their proximity to other locations mentioned in the message. We also use parent/child relationships between locations in our database to derive different levels of detail, arriving at the most detailed location possible.
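The resolution step described above can be sketched as follows. FloodTags describes its optimisation algorithm only in outline, so this minimal version swaps in a simple greedy rule: each candidate for an ambiguous name is scored by its great-circle distance to the nearest candidate of any other name in the same message, and the closest one wins. The parent/child hierarchy is then used to pick the most detailed resolved place. The `Place` structure, the gazetteer layout and all function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Place:
    geo_id: int
    name: str
    lat: float
    lon: float
    parent_id: Optional[int] = None  # administrative parent, if any


def haversine_km(a: Place, b: Place) -> float:
    """Great-circle distance between two places in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a.lat, a.lon, b.lat, b.lon))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def resolve(mentions, gazetteer):
    """Pick one candidate per mentioned name, preferring the candidate closest
    to any candidate of the other names mentioned in the same message."""
    resolved = {}
    for name in mentions:
        candidates = gazetteer.get(name, [])
        if not candidates:
            continue  # name not in the gazetteer
        others = [p for other in mentions if other != name
                  for p in gazetteer.get(other, [])]
        if not others:
            resolved[name] = candidates[0]  # no context to disambiguate with
            continue
        resolved[name] = min(
            candidates, key=lambda c: min(haversine_km(c, o) for o in others))
    return resolved


def depth(place, by_id):
    """Number of ancestors above a place in the parent/child hierarchy."""
    d = 0
    while place.parent_id is not None and place.parent_id in by_id:
        place = by_id[place.parent_id]
        d += 1
    return d


def most_detailed(resolved, by_id):
    """Return the resolved place that lies deepest in the hierarchy."""
    return max(resolved.values(), key=lambda p: depth(p, by_id))
```

With a hypothetical gazetteer in which "Riverside" exists both inside "Province A" and on another continent, a message mentioning both names resolves "Riverside" to the nearby candidate, and `most_detailed` returns it because it is a child of the province. A production system would optimise over all mentions jointly rather than greedily.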

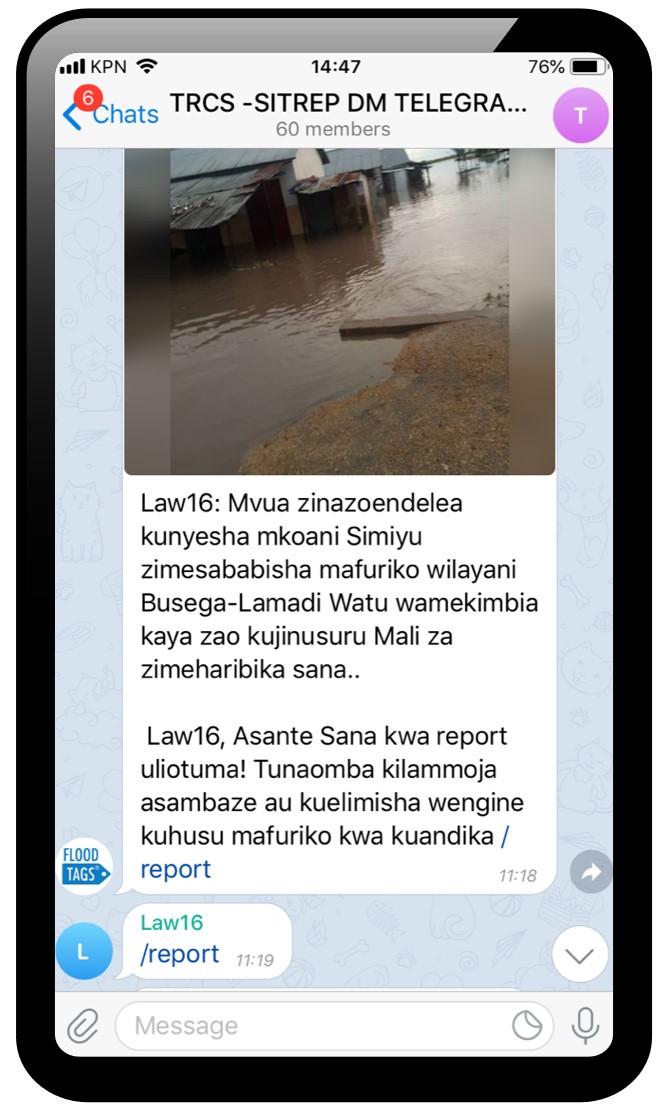

Chatbots

Messengers like WhatsApp and Telegram have found their way into water and disaster management without any preset plan. Within only a few years they have become widely used in disaster management operations and are now an important source of information during floods. But there are downsides: information overload, and difficulty viewing the information in other software. This hampers getting a quick, integrated overview of a situation.

FloodTags developed a chatbot that, with the explicit permission of group members, structures the event information shared in chat groups. This helps organisations get a quick and clear overview of a situation. In addition, using our optional FloodTags bot, group members can receive information and share ground observations via WhatsApp and Telegram (two-way communication). This information is then linked to, and visible in, the FloodTags Dashboard and API.

Image Analysis

Together with our partners we developed machine learning algorithms that detect various event-related features in photos. We have tested the image processing most extensively on flood events, where from a photo we can detect whether it is flood-related, approximately how deep the water is, whether rescue workers are present, and the level of urbanisation. We can also recognise cattle and various means of transport, such as motorcycles and cars. This lets users focus on the data that is most important to them, as any of these properties of a flood event can be listed in our dashboard.

Level of Detail

We analyse media data at different levels of detail. At the global level we use coarse media analytics that detect and describe larger events at country and province level. For national and city-level media analyses we take a more fine-grained approach, which also captures smaller events and georeferences down to neighbourhood and sometimes even street level.

Another difference lies in the classifiers we use at each level. For global systems we usually favour high precision, which delivers a low volume of highly accurate data at the risk of missing some relevant information. In fine-grained solutions we often favour recall, which delivers more data at the cost of occasionally showing irrelevant information. Depending on the use case, we tailor the system to your needs.
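A common way to realise this trade-off is to tune the decision threshold of a classifier on a labelled validation set. The minimal sketch below is one such approach, not a description of the FloodTags pipeline: with a precision floor it returns the lowest qualifying threshold (which maximises recall under that floor, since recall can only drop as the threshold rises), and with a recall floor it returns the strictest threshold that still meets it. Function names and parameters are hypothetical.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when items with score >= threshold count as relevant."""
    predicted = [s >= threshold for s in scores]
    tp = sum(1 for p, l in zip(predicted, labels) if p and l)
    fp = sum(1 for p, l in zip(predicted, labels) if p and not l)
    fn = sum(1 for p, l in zip(predicted, labels) if not p and l)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


def pick_threshold(scores, labels, *, min_precision=None, min_recall=None):
    """Choose a decision threshold from the observed scores.

    - min_precision: lowest threshold meeting the precision floor
      ("global" style: few, highly accurate results).
    - min_recall: highest threshold still meeting the recall floor
      ("fine-grained" style: more results, some noise).
    Returns None if no threshold satisfies the requested floor.
    """
    candidates = sorted(set(scores))
    if min_precision is not None:
        for t in candidates:  # ascending: lower threshold -> higher recall
            if precision_recall(scores, labels, t)[0] >= min_precision:
                return t
    elif min_recall is not None:
        for t in reversed(candidates):  # descending: stricter thresholds first
            if precision_recall(scores, labels, t)[1] >= min_recall:
                return t
    return None
```

On the same validation data, a precision floor typically yields a much stricter threshold than a recall floor, which mirrors the difference between the global and the fine-grained set-ups described above.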

For weather impact assessment

We started with flood monitoring, but have since extended to any event type relevant to weather impact assessment. This includes:

- Floods, mudflows and landslides

- Heavy precipitation (rain, snow, hail, fog)

- Winds (hurricanes, typhoons)

- Droughts and wildfires

For each of these perils we also cover, as described above, the associated event impact (e.g. water depths), the status of prevention measures (e.g. breaches, drainage problems, trash accumulation), the status of response measures (e.g. evacuations), perceptions, rumours, etc.

Human-in-the-loop

We use machine learning across our system, for text classification and image processing of the individual tags in our data. However, any machine learning algorithm depends on training data annotated by humans, and if the format of the incoming data changes even slightly over time, the algorithm's performance may degrade. We therefore developed a human-in-the-loop system that enables our users to correct mistakes made by the algorithms in real time. These corrections feed back into the algorithms, so they are continuously optimised and keep performing well in an ever-changing environment. The human-in-the-loop system is available for both our text and our image classification.
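To make the feedback loop concrete, here is a minimal sketch of a text classifier that learns directly from user corrections. It uses a bag-of-words perceptron, chosen only because its mistake-driven update is easy to follow; FloodTags does not state which algorithm its human-in-the-loop system retrains, and the class and method names are hypothetical.

```python
class OnlineTextClassifier:
    """Binary bag-of-words perceptron that is updated by human corrections.

    Illustrative stand-in: the algorithm FloodTags actually uses is not specified.
    """

    def __init__(self):
        self.weights = {}  # token -> weight
        self.bias = 0.0

    @staticmethod
    def features(text):
        # Naive whitespace tokenisation; real systems need language-aware tokenisers.
        return text.lower().split()

    def score(self, text):
        return self.bias + sum(self.weights.get(w, 0.0) for w in self.features(text))

    def predict(self, text):
        """True if the text is judged relevant (e.g. flood-related)."""
        return self.score(text) > 0

    def correct(self, text, is_relevant):
        """Apply a user's label. Weights change only if the model was wrong;
        returns True when an update was made."""
        if self.predict(text) == is_relevant:
            return False
        step = 1.0 if is_relevant else -1.0
        for w in self.features(text):
            self.weights[w] = self.weights.get(w, 0.0) + step
        self.bias += step
        return True
```

For example, after a user marks "street flooded knee deep" as relevant, a message such as "flooded street" is classified as relevant too, while a later correction of "flood of emails at work" as irrelevant pushes the model away from that figurative use of "flood".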